Let’s start with a simple example. Imagine you visit a large organization for the first time to do some office work, and you aren’t familiar with any part of the building.

The managers station some staff at the entrance to guide visitors and guard the building. Without these guides, visitors keep asking other employees for help finding the department they are looking for, and the order of the organization breaks down.

The robots.txt file plays the role of these guides on a website, not for human visitors but for robots such as Google’s crawlers, which check the different parts of your website.

What are robots and what do they do?

Robots are software programs that check websites automatically. Your site’s visitors are not only people: search engine bots, most importantly Google’s, also visit and crawl your site. These robots check your site several times a day, and on large sites this can happen tens of thousands of times.

Each robot does a specific job. For example, the most important robot (Googlebot) finds new pages on the internet and downloads them for further review by the ranking algorithms. As a result, these bots are not only harmless but also very useful, since they help your site get ranked.

But you should be careful, because the bots search every corner of your site and save the information on Google’s servers. As a result, they may end up showing information that you do not want to be shown. Fortunately, we can limit the bots’ access to a website by using the Robots.txt file.

What is the Robots.txt file?

The Robots.txt file is like a permit for robots. Well-behaved robots read the “robots.txt” file before they crawl the site. We can control the access of bots by writing a few simple command lines in this file.

With a few commands, we can specify which pages a robot is allowed to examine and which it isn’t. This keeps your web server from being tied up serving robots and helps you optimize your site for SEO.
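As a rough illustration of the idea above, the following Python sketch parses a two-line robots.txt and asks whether a bot may fetch a given page. The domain `example.com`, the `/private/` folder and the helper name `is_allowed` are made up for this example. Python’s built-in parser only approximates how a real crawler such as Googlebot interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: every bot is kept out of /private/.
SIMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A normal page is open to the bot, a page under /private/ is not.
print(is_allowed(SIMPLE_RULES, "Googlebot", "https://example.com/blog/post-1"))     # True
print(is_allowed(SIMPLE_RULES, "Googlebot", "https://example.com/private/report"))  # False
```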

Why should we have a “Robots.txt” file?

Not all pages of a website are equally important. Sometimes webmasters don’t want their sites to be indexed by search engines. There can be different reasons for this; the first is that the site may not have useful content.

Another reason may be that they have a big site with a lot of visitors and they don’t want to spend server resources, such as bandwidth and processing power, on repeated visits from robots.

What does a robots.txt file do?

The main goal of using a robots.txt file is to limit excessive robot requests to your web pages.

Can a page be removed from search results by using the “Robots.txt” file?

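In the past, it was possible to remove a page from search results by using an unofficial “Noindex” command in the “Robots.txt” file, but this is no longer useful: Google stopped supporting that command in September 2019. Blocking a page in robots.txt does not reliably hide it either, because Google can still index a blocked URL when other pages link to it.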

Familiarity with Google bots

Google has a number of crawler robots that automatically scan websites and find pages by following the links from one page to another page.

Here is a list of the most important Google bots that you should know:

AdSense: a robot for browsing pages to display related ads

Googlebot Image: a robot that finds and checks images

Googlebot News: a robot to index news sites

Googlebot Video: a robot that reviews videos

Googlebot: a robot to discover and index web pages. This robot comes in two types: desktop and smartphone.

Each of these bots continuously reviews website pages, and you can limit them if you need to.
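Limiting one specific bot could look like the sketch below. It assumes a hypothetical site that wants to keep the image crawler (user-agent token `Googlebot-Image`) out of a `/photos/` folder while all other bots are only kept out of `/tmp/`. The folder names and the helper `build_parser` are illustrative, and the check uses Python’s standard parser rather than Google’s own.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for the image bot, one for everyone else.
PER_BOT_RULES = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /tmp/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer can_fetch()."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


per_bot = build_parser(PER_BOT_RULES)
photo_url = "https://example.com/photos/cat.jpg"

print(per_bot.can_fetch("Googlebot-Image", photo_url))  # False: blocked by its own group
print(per_bot.can_fetch("Googlebot", photo_url))        # True: falls back to the * group
```

Each bot follows the group that names it and uses the `*` group only when no group matches its name.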

What is the job of crawling robots?

How often crawling robots visit your website depends on several factors. If the site changes a lot and publishes more new content, crawling robots check it more often. For example, bots crawl and index news websites more quickly because these sites publish news moment by moment.

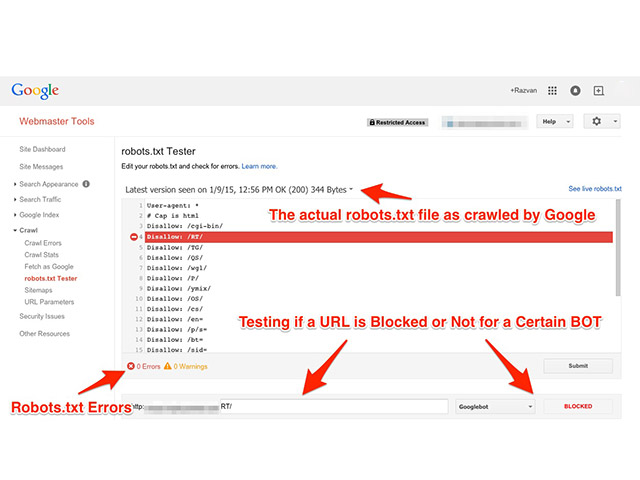

Each website has a section named “Crawl Stats” in its Search Console that shows how many times bots crawl the site each day; in this section, you can also see the volume downloaded by the bots and the loading time of the pages.

Familiarity with “Robots.txt” file commands and their meanings

In all, there are four important commands that we need in the “Robots.txt” file:

User-agent: To specify the robot that the commands are written for.

Disallow: To specify the parts that the robot can’t browse or check

Allow: To specify the parts that the robot can browse or check

Sitemap: To show the site map file address to bots.
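The four commands can be seen working together in the short sketch below. The paths, the sitemap address and the domain `example.com` are invented for illustration. The `Allow` line is placed before the broader `Disallow` line because Python’s standard parser applies the first matching rule, whereas Google picks the most specific one, and this order gives the same answer under both readings. Note that `site_maps()` requires Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file using all four commands.
FOUR_COMMANDS = """\
User-agent: *
Allow: /admin/help/
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

rules = RobotFileParser()
rules.parse(FOUR_COMMANDS.splitlines())

# Allow opens up one subfolder inside the otherwise blocked /admin/ area.
print(rules.can_fetch("Googlebot", "https://example.com/admin/help/faq"))  # True
print(rules.can_fetch("Googlebot", "https://example.com/admin/settings"))  # False

# Sitemap is independent of any User-agent group.
print(rules.site_maps())  # ['https://example.com/sitemap.xml']
```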

Conclusion

You don’t need to spend a lot of time constantly checking your robots.txt file; if you want to take advantage of this feature, it’s best to use the online tool in Google Search Console. With this tool, you can manage, edit, debug and update your robots.txt file easily.

Finally, we recommend that you avoid updating this file continuously as much as possible. It is best to create a complete, final robots.txt file once the website has been built.

Frequent updates to this file, while not having much effect on your site’s performance, can complicate how crawlers and bots access your site.